New products from smart sensors to microcontrollers are making it easier than ever to design built-in AI at the edge.

The choice between putting AI on the edge or in the cloud is a tradeoff among numerous factors. Sometimes the answer is obvious, such as an autonomous vehicle that clearly has to make decisions on the spot; latency makes cloud-based AI a non-option for AVs. Likewise, a security camera doesn’t need to record every time it senses motion; instead, it could employ edge-based AI to perform person detection and record only when it sees a human.

When bandwidth, security and latency are issues, it’s time to put AI right where the action is. Fortunately, engineers have a slew of tools and products to enable more AI on the edge. These systems may be implemented with smart sensors, microcontrollers or even FPGAs, often for just a few dollars per unit. On the software side, tools such as TensorFlow can help engineers develop and train neural networks. Many “baseline” AI software modules are freely available; these provide a starting point for applications like speech and image recognition, and engineers can tailor them to a particular application.

Here are some of the many solutions now available to engineers eager to implement AI at the edge.

Sensors with AI

There are plenty of sensors that enable AI at the edge. For example, California-based Useful Sensors offers three edge-based AI products. One is a portable QR code scanner, the Tiny Code Reader, which interprets QR codes and sends the data through an I2C interface. It’s designed to be used with single-board computers like Raspberry Pi and Arduino. The Tiny Code Reader developer’s guide includes example Python code and tutorials.

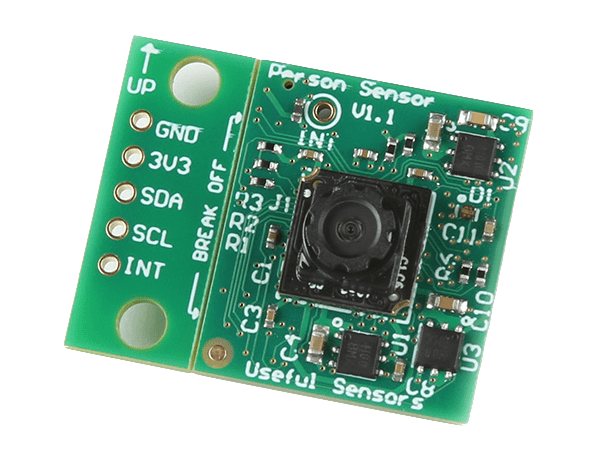

Need a low-cost facial identification system? Useful Sensors offers the Person Sensor, a camera module with a built-in microcontroller running an AI algorithm that detects and identifies human faces. It can count the number of faces detected and, under certain conditions, identify up to eight individual faces. The unit draws around 150 mW, includes an I2C interface, measures 19.3 x 22 mm, and sells for less than $10.

Useful Sensors offers a developer’s guide that includes examples of code that perform facial detection and location. Facial recognition with the device is still experimental, but one user taught a Person Sensor to recognize the last eight U.S. presidents by showing it pictures from the internet. After training, it correctly identified all of their pictures, although they were the same photos that were used in training. It would be interesting to know whether it would be as accurate with other images of the same presidents.

Useful Sensors also developed AI in a Box, a tabletop unit that’s capable of carrying on a conversation and performing speech-to-text operations using a locally run large language model (LLM). It’s not connected to the internet and has no wireless capabilities, so all conversations are confidential.

AI in a Box can perform closed-captioning on the spot. As it recognizes speech, it sends the equivalent text through its built-in HDMI port. A USB port allows it to communicate with other devices. For example, in a home automation system, AI in a Box can interpret a command (e.g., “Turn on the living room light”) and send the appropriate digital command to a controller. It can also perform language translation, with text-based output showing the original speech and the translated text.

AI-based microcontrollers

The most common edge-based AI systems are built around microcontrollers that run AI and ML algorithms. They’re affordable, compact and feature-rich, giving designers abundant processing power in a small space. Many have very lean power requirements, making them suitable for battery-powered products. Embedded microcontrollers can perform many of the AI functions required of smart sensors, such as image and voice recognition.

Single-board computers (SBCs) such as Raspberry Pi and Arduino are more than capable of performing AI on the edge, but their cost is quite high compared to microcontrollers. Granted, SBCs have built-in features that shorten development time—but the price tag makes them unsuitable for many edge-based AI applications, especially those that will be mass produced. For hobbyists, one-off designs and low-volume products, SBCs are fine, but for one-tenth of the cost, an engineer can use a microcontroller (often the same one that’s at the heart of many SBCs).

Some of these microcontroller-based “TinyML” systems can perform voice and facial recognition and even natural language processing. At the low end, the actual training may take place on larger computers, but a high-end microcontroller can handle both the training and the implementation. Most support a wide range of wired and wireless interfaces, security features and expansion capabilities, and draw just a few milliwatts of power.

When training is done externally on a more powerful computer, a toolchain like TensorFlow Lite can convert and quantize the trained model so that it fits into the microcontroller’s available memory.
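
To see why quantization helps a model fit, here is a minimal sketch of 8-bit affine quantization in plain Python. It illustrates the arithmetic only and is not TensorFlow Lite's actual implementation; the function names and the sample weights are made up for the example.

```python
from array import array

def quantize_int8(weights):
    """Map float weights to int8 with an affine (scale + zero-point) scheme."""
    w_min = min(min(weights), 0.0)   # the range must include 0.0
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0   # avoid a zero scale for all-zero input
    zero_point = round(-128 - w_min / scale)
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return array("b", q), scale, zero_point

def dequantize(q, scale, zero_point):
    """Recover approximate float values from the int8 codes."""
    return [(v - zero_point) * scale for v in q]

# Hypothetical weights, stored as 32-bit floats
weights = array("f", [-0.8, -0.1, 0.0, 0.35, 1.2])
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)

print(weights.itemsize * len(weights), "bytes ->", q.itemsize * len(q), "bytes")
print("max error within one step:",
      max(abs(a - b) for a, b in zip(weights, restored)) <= scale)
```

Each weight shrinks from four bytes to one, at the cost of a rounding error no larger than one quantization step. Production toolchains add per-channel scales and calibration data, which this sketch leaves out.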

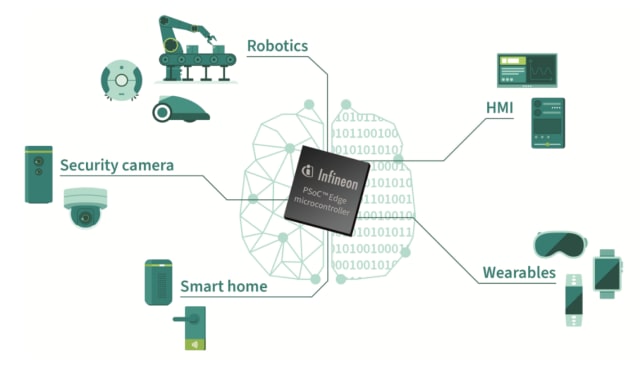

Until recently, neural network processing was the domain of powerful cloud-based computers, but microcontrollers with ML acceleration are now capable of performing many advanced AI tasks on the edge. For example, Infineon’s PSoC Edge microcontrollers include ML acceleration for neural network applications. Most also come with software suites such as ModusToolbox, which includes a development environment, a code library and runtime assets, and Imagimob Studio, a tool for managing data and building ML models. The PSoC Edge device includes an Arm Cortex-M55 processor featuring Arm’s Helium technology for DSP and ML applications, as well as an integrated neural processing unit (NPU) designed to accelerate ML inference on embedded devices.

Renesas’ RA8 microcontroller series was also designed for edge-based AI applications. It features AI/ML hardware acceleration and Arm’s TrustZone hardware-level security. The RA8 series supports floating point operations as well as scalar and vector instructions. Designers can build and program prototypes with the EK-RA8M1 evaluation kit.

Analog Devices’ MAX78000 is optimized for image and video recognition, thanks to its audio/video interfaces, floating-point unit and neural network capabilities that support multilayer perceptrons, convolutional neural networks and recurrent neural networks (MLP, CNN and RNN, respectively). Its applications include object identification, audio processing, noise cancellation, facial recognition, time-series analysis, health monitoring and predictive maintenance. The MAX78000 is optimized for battery-powered applications, drawing just a few milliwatts in active mode and offering various low-power modes based on which chip features are needed.
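
For battery-powered designs, it helps to turn "a few milliwatts" into an estimated runtime. The sketch below is a back-of-the-envelope calculation for a device that alternates between an active mode and a low-power mode. All of the numbers are hypothetical placeholders, not datasheet values for any part mentioned here, and the math ignores regulator losses and battery self-discharge.

```python
def average_power_mw(active_mw, sleep_mw, duty_cycle):
    """Time-weighted average power; duty_cycle is the fraction of time spent active."""
    return duty_cycle * active_mw + (1.0 - duty_cycle) * sleep_mw

def battery_life_hours(capacity_mah, voltage_v, avg_power_mw):
    """Battery energy (mAh x V = mWh) divided by the average draw in mW."""
    return capacity_mah * voltage_v / avg_power_mw

# Hypothetical example: active 10% of the time, running from a 3 V coin cell
avg = average_power_mw(active_mw=5.0, sleep_mw=0.01, duty_cycle=0.10)
hours = battery_life_hours(capacity_mah=220, voltage_v=3.0, avg_power_mw=avg)
print(f"average draw: {avg:.3f} mW, estimated life: {hours / 24:.0f} days")
```

The takeaway is that the duty cycle usually matters as much as the active-mode figure: halving the time spent awake nearly doubles the runtime when the sleep current is small.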

FPGAs with AI

Traditionally, AI has been implemented as a software solution, but AI programming algorithms merely emulate the human thought process. The brain is strictly hardware, and in a similar vein, AI can be implemented in hardware using FPGAs. Although microcontrollers are getting faster, FPGAs avoid software latency altogether by putting all features on the silicon. Applications such as speech and image recognition can be “programmed” directly into the hardware. Parallel processing can be implemented on FPGAs by dedicating a section of the chip to a particular process. This can reduce power consumption and possibly decrease the parts inventory and circuit footprint.

Sensor fusion (combining multiple sensor outputs, weighting each based on certain conditions, and reaching a logical conclusion) is a perfect use for parallel processing. In applications such as autonomous vehicles, which employ lidar, radar, ultrasonic and vision sensors, low latency is critical. FPGAs fit the bill quite effectively.
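
One simple way to weight sensor outputs is by how much each sensor can be trusted at that moment. The sketch below uses inverse-variance weighting, a common textbook technique, to fuse several distance estimates. It is an illustration in Python rather than FPGA logic, and the sensor names and numbers are invented for the example.

```python
def fuse(readings):
    """Inverse-variance weighted fusion.

    readings: list of (value, variance) pairs, one per sensor.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in readings]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    return value, 1.0 / total

# Hypothetical distance estimates in meters: (value, variance)
lidar = (20.1, 0.01)       # precise in clear conditions
radar = (20.6, 0.25)       # noisier, but works in fog
ultrasonic = (19.0, 4.0)   # poor at this range

distance, variance = fuse([lidar, radar, ultrasonic])
print(f"fused distance: {distance:.2f} m, variance: {variance:.4f}")
```

The fused estimate leans toward the most reliable sensor, and its variance is lower than that of any single input. If conditions change (say, fog degrades the lidar), raising that sensor's variance shifts the weight to the others.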

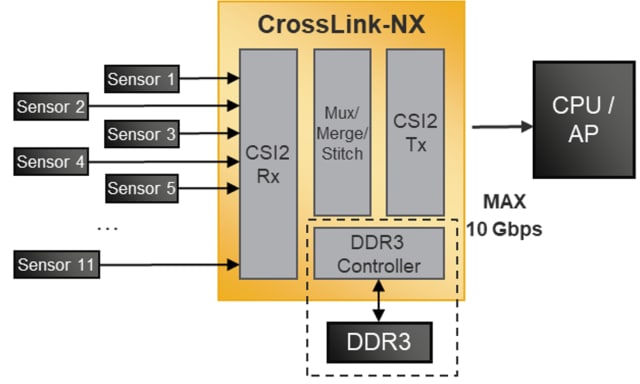

The Lattice CrossLink-NX FPGA family was designed specifically for edge-based vision processing and sensor fusion applications, such as aggregating data from multiple sensors and drawing conclusions. In addition to autonomous vehicles, sensor fusion lends itself to industrial applications like predictive maintenance, by outfitting the machine with an array of sensors and continually looking for anomalies that could suggest an impending failure. Sporting up to 39k logic cells, the CrossLink-NX series also includes DSPs, memory and a variety of interfacing options, including gigabit Ethernet and PCIe, among others. Its small footprint and low power consumption make it suitable for edge processing. Lattice offers several evaluation boards and accessories, as well as a full development suite. The company also provides pre-written “core” software modules for dozens of AI applications. These modules can be freely incorporated into user designs and tweaked to match the particular application’s specs.
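
The predictive maintenance idea mentioned above, watching a sensor stream for anomalies, can be sketched with a rolling statistical check. This is a generic Python illustration, not a Lattice module; the class name, window size and threshold are assumptions chosen for the example, and a production system would more likely use a trained model.

```python
from collections import deque
from statistics import mean, stdev

class AnomalyDetector:
    """Flags readings that deviate sharply from the recent history."""

    def __init__(self, window=50, threshold=4.0, min_samples=10):
        self.history = deque(maxlen=window)
        self.threshold = threshold      # allowed deviation, in standard deviations
        self.min_samples = min_samples  # readings needed before judging

    def update(self, x):
        """Return True if x is anomalous; normal readings join the history."""
        is_anomaly = False
        if len(self.history) >= self.min_samples:
            mu, sigma = mean(self.history), stdev(self.history)
            is_anomaly = sigma > 0 and abs(x - mu) > self.threshold * sigma
        if not is_anomaly:
            self.history.append(x)
        return is_anomaly

# Hypothetical vibration readings: steady operation, then a spike
detector = AnomalyDetector()
normal = [1.0 if i % 2 == 0 else 1.1 for i in range(20)]
flags = [detector.update(x) for x in normal]
print("spike flagged:", detector.update(5.0))
print("normal reading flagged:", detector.update(1.05))
```

Anomalous readings are kept out of the history so that a single spike does not inflate the baseline and mask the next one.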

More edge AI applications

As Moore’s Trend (it’s not really a law) continues to crank up the power and cut down the price of hardware, more edge-based AI applications are appearing. For example, Innoviz has introduced the InnovizTwo, a high-performance, automotive-grade lidar sensor with built-in AI. The company says the InnovizTwo enables safe L2+ driving capabilities, with an eye toward L3 (conditional automation) driving. The product features InnovizCore, a system-on-a-chip (SoC) for computer vision algorithms and real-time driving decisions. Perception software processes the raw point cloud data from each lidar sensor and, via sensor fusion, performs object and obstacle detection, classification, tracking, lane sensing and landmark identification for mapping. It also provides support for AUTOSAR (AUTomotive Open System ARchitecture) and ISO 26262 (functional safety for road vehicles). As the InnovizTwo gains traction, automobile manufacturers will be able to collect performance data, allowing Innoviz to improve its ML models.

Room occupancy sensors are used in security and building automation systems, and usually rely on passive infrared detectors, ultrasonic sensors and smart cameras. But many of those areas have Wi-Fi routers and gateways that employ multiple-input, multiple-output (MIMO) beamforming, in which several antennas transmit and receive simultaneously, with the data spread across many subcarriers within the channel. Monitoring variables such as the amplitude and phase of the reflected Wi-Fi signals on each subcarrier gives the channel state information (CSI); when the CSI changes, the system can analyze the changes to detect objects in the room and sense those that are moving. How does one combine all that information to form a conclusion? If you guessed sensor fusion and AI at the edge, then you’ve been paying attention!
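
To make the CSI idea concrete, the sketch below compares a new CSI frame against an empty-room baseline and computes a simple motion score. It assumes each frame is a list of complex channel gains, one per subcarrier; the function names, the sample values and the threshold are hypothetical, and a real system would feed such features to a trained model rather than rely on a fixed cutoff.

```python
def csi_amplitudes(frame):
    """Amplitude of each subcarrier's complex channel gain."""
    return [abs(h) for h in frame]

def motion_score(baseline, frame):
    """Mean relative change in amplitude across subcarriers."""
    base = csi_amplitudes(baseline)
    current = csi_amplitudes(frame)
    changes = [abs(c - b) / b for b, c in zip(base, current) if b > 0]
    return sum(changes) / len(base)

# Hypothetical frames with three subcarriers each
empty_room = [1 + 0j, 0 + 2j, 3 + 4j]
still_room = [1 + 0j, 0 + 2j, 3 + 4j]
person_moving = [2 + 0j, 0 + 2j, 3 + 4j]

THRESHOLD = 0.1  # assumed value; would be tuned per installation
print("still room:", motion_score(empty_room, still_room) > THRESHOLD)
print("person moving:", motion_score(empty_room, person_moving) > THRESHOLD)
```

This version looks only at amplitude. Phase carries useful information too, but raw phase readings typically need calibration before they can be compared frame to frame.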

As the above examples show, there’s a multitude of edge-based applications ripe for the picking, and the products and tools to implement them are readily available. Now get to work on that next design, and use tools that help you work smarter, not harder!