Here’s what engineers need to know about using generative models in design.

Generative artificial intelligence (AI), also known as GenAI, has been at the forefront of the AI boom that began in the early 2020s. However, as with much of the field of artificial intelligence, the basis for the technology is significantly older. It goes all the way back to Andrey Markov, the Russian mathematician who developed the stochastic process now known as a Markov chain and applied it to sequences of letters in natural-language text.

While the theoretical basis of generative AI can be traced back to early 20th-century advances in statistics, the technology that makes it practical is much more recent. This combination of complex mathematics and novel technology tends to obscure GenAI’s actual capabilities, leading people to underestimate or, more often, overestimate what it can do. Engineers are likely to see more and more references to generative AI in the coming years, so it’s worth settling some basic questions.

What’s the difference between generative AI and generative design?

There’s an unfortunate tendency among industry professionals to use ‘generative design’ and ‘generative AI’ interchangeably, largely driven by the current marketing hype surrounding artificial intelligence. However, there are important differences between these two terms, specifically in how their underlying mechanisms operate.

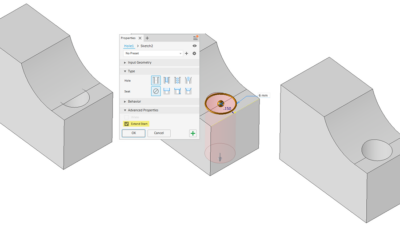

Generative design uses parametric rules and constraints to generate candidate designs iteratively, evaluating each one against the defined requirements. For example, using generative design to create a load-bearing strut would involve setting the relevant limits on materials, dimensions, loads, and so on, resulting in a variety of feasible designs that can be further modified manually.
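As a rough illustration of that rule-driven iteration, here is a minimal Python sketch of a brute-force parametric sweep. It assumes a pin-ended hollow circular steel strut in pure axial compression; the load, length, material properties, safety factor, and parameter ranges are all hypothetical placeholders, and commercial generative design tools use far richer geometry and solvers than this. The two rules are that the axial stress F/A stays below an allowable value and that the Euler buckling load π²EI/L² exceeds the applied load by a safety factor.

```python
import math
from itertools import product

# Hypothetical requirements for a pin-ended tubular steel strut in pure axial
# compression (SI units). Every value here is an illustrative placeholder.
LOAD_N = 50_000.0             # applied axial load
LENGTH_M = 1.2                # strut length
ALLOWABLE_STRESS_PA = 150e6   # allowable compressive stress
E_PA = 200e9                  # Young's modulus of steel
DENSITY_KG_M3 = 7850.0        # density of steel
BUCKLING_SAFETY_FACTOR = 2.0

def evaluate(outer_d, wall_t):
    """Apply the parametric rules; return (feasible, mass_kg) for one tube size."""
    inner_d = outer_d - 2 * wall_t
    if inner_d <= 0:
        return False, None
    area = math.pi / 4 * (outer_d**2 - inner_d**2)
    inertia = math.pi / 64 * (outer_d**4 - inner_d**4)
    stress_ok = LOAD_N / area <= ALLOWABLE_STRESS_PA
    # Euler critical load for a pin-ended column (effective length factor K = 1).
    p_crit = math.pi**2 * E_PA * inertia / LENGTH_M**2
    buckling_ok = p_crit >= BUCKLING_SAFETY_FACTOR * LOAD_N
    return stress_ok and buckling_ok, DENSITY_KG_M3 * area * LENGTH_M

def generate_designs(diameters, thicknesses):
    """Iterate over every parameter combination and keep the feasible ones, lightest first."""
    designs = []
    for d, t in product(diameters, thicknesses):
        feasible, mass = evaluate(d, t)
        if feasible:
            designs.append({"outer_d_m": d, "wall_t_m": t, "mass_kg": mass})
    return sorted(designs, key=lambda design: design["mass_kg"])

diameters = [0.020 + 0.005 * i for i in range(9)]   # 20 mm to 60 mm outer diameter
thicknesses = [0.001 * i for i in range(1, 7)]       # 1 mm to 6 mm wall
candidates = generate_designs(diameters, thicknesses)
for design in candidates[:3]:
    print(design)
```

Every surviving candidate satisfies the same rules, so an engineer can choose among them by mass, cost, or manufacturability and then refine the chosen design by hand.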

In contrast, generative AI learns statistical model weights from training data and then uses those weights to generate new content. Using generative AI to create a load-bearing strut would involve training a model on previous strut designs toward a defined goal, resulting in one or more designs that would, ideally, combine the best features of previous efforts into something novel.
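To make the contrast concrete, the Python sketch below uses a deliberately tiny statistical stand-in for a generative model. It assumes a hypothetical archive of earlier strut designs, each described only by outer diameter and wall thickness. "Training" estimates a mean and standard deviation per parameter (playing the role of model weights), and "generation" samples new designs from that learned distribution. Real generative AI models have millions or billions of weights and learn far more complex distributions; independent Gaussians, as used here, also ignore correlations between parameters.

```python
import random
import statistics

# Hypothetical archive of earlier strut designs: (outer diameter m, wall thickness m).
PREVIOUS_DESIGNS = [
    (0.040, 0.004), (0.045, 0.003), (0.050, 0.003),
    (0.042, 0.004), (0.048, 0.003), (0.055, 0.002),
]

def fit(designs):
    """'Training': estimate a mean and standard deviation for each design parameter.
    These numbers play the role of the model's learned weights."""
    columns = zip(*designs)
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]

def sample(model, n, seed=0):
    """'Generation': draw n new parameter sets from the learned distribution."""
    rng = random.Random(seed)
    return [tuple(rng.gauss(mu, sigma) for mu, sigma in model) for _ in range(n)]

model = fit(PREVIOUS_DESIGNS)
new_designs = sample(model, 5)
for outer_d, wall_t in new_designs:
    print(f"outer diameter {outer_d * 1000:.1f} mm, wall {wall_t * 1000:.2f} mm")
```

Sampled designs resemble the archive without copying it, but nothing guarantees they are physically valid, so each would still need to be checked against stress, buckling, and manufacturing requirements.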

At the time of writing, generative design is far more common in engineering than generative AI, which has seen the most success in the areas of natural language processing and generating digital content, primarily images and text. Nevertheless, given the widespread interest and significant investment in generative AI across industries, it’s likely that engineers will see more of it in their jobs in the years to come.

How does generative AI work?

At its core, generative AI is an application of machine learning, specifically unsupervised or self-supervised learning on a data set. Broadly speaking, GenAI systems can be classified by modality: unimodal systems accept only one type of input, such as text, while multimodal systems accept multiple types of input, such as images and text.

In each case, the system is trained on relevant examples in the appropriate modality (words, sentences, images, and so on). Given enough examples, it eventually recognizes patterns that enable it to discriminate between examples as well as generate its own. In multimodal systems, these patterns include correlations between modalities, which enable the system to translate between them, for example from a text prompt to an image.
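A Markov chain, the technique the introduction credits to Andrey Markov, is about the simplest system that shows both abilities. The word-level Python sketch below assumes a hypothetical three-sentence training corpus. It learns next-word counts from the text itself (a self-supervised signal, since no labels are supplied), scores how plausible a sentence is, and generates new sentences. The floor probability for unseen transitions is a crude assumption; practical language models use proper smoothing or, today, neural networks.

```python
import math
import random
from collections import defaultdict

# Hypothetical training corpus; "<s>" and "</s>" mark sentence start and end.
CORPUS = [
    "the strut carries the load",
    "the beam carries the load",
    "the strut resists buckling",
]

def tokens(sentence):
    return ["<s>"] + sentence.lower().split() + ["</s>"]

def train(corpus):
    """Count word-to-next-word transitions; the targets come from the text itself."""
    counts = defaultdict(lambda: defaultdict(int))
    for sentence in corpus:
        words = tokens(sentence)
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    return counts

def log_prob(counts, sentence, floor=1e-6):
    """Discriminate: higher (less negative) scores mean 'more like the training data'."""
    words = tokens(sentence)
    total = 0.0
    for current, nxt in zip(words, words[1:]):
        options = counts.get(current, {})
        seen = sum(options.values())
        p = options.get(nxt, 0) / seen if seen else 0.0
        total += math.log(max(p, floor))  # crude floor for unseen transitions (assumption)
    return total

def generate(counts, seed=0, max_words=20):
    """Generate: walk the chain, sampling each next word in proportion to its count."""
    rng = random.Random(seed)
    word, output = "<s>", []
    for _ in range(max_words):
        options = counts[word]
        word = rng.choices(list(options), weights=list(options.values()))[0]
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)

counts = train(CORPUS)
print(log_prob(counts, "the strut carries the load"))  # familiar word order
print(log_prob(counts, "load the carries strut the"))  # scrambled, scores much lower
print(generate(counts, seed=3))
```

Because generation can splice together transitions from different training sentences, the chain may produce sentences it never saw, which is the same basic idea, at a vastly smaller scale, behind modern generative models.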

Many early results, particularly in image generation, were achieved with a generative adversarial network (GAN), in which one model (the generator) attempts to create novel data based on its training data while another (the discriminator) attempts to distinguish the generated data from the real thing. As the two models compete during training, the generator’s outputs become increasingly difficult to tell apart from real data.
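In the standard formulation, this competition is written as a two-player minimax game. With D(x) the discriminator’s estimated probability that a sample x is real, G(z) the generator’s output for random noise z, and p_g the distribution of generated samples, training seeks the first expression below; the second gives the best possible discriminator for a fixed generator.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]

D^*(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
```

The discriminator raises V by scoring real samples near 1 and generated ones near 0, while the generator lowers it by making D(G(z)) large. When the generator’s distribution matches the real data (p_g = p_data), the optimal discriminator outputs 1/2 everywhere: it can no longer tell generated data from the real thing.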

More recently, transformers have emerged as a popular alternative architecture to GANs. They’re designed primarily for sequential data, such as text in natural language processing, and use self-attention mechanisms to identify dependencies between items anywhere in a sequence. Because a transformer processes all positions of a sequence in parallel during training, the approach has proven significantly more scalable, paving the way for ChatGPT and related AI tools.
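The core of self-attention is scaled dot-product attention, softmax(QKᵀ/√d_k)V, where the queries Q, keys K, and values V are linear projections of the input sequence. The pure-Python sketch below computes a single attention head over three toy "tokens". The embeddings are hypothetical, identity matrices stand in for the learned projection weights, and real transformers add multiple heads, masking, positional encodings, and much larger dimensions.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(scores):
    """Numerically stable softmax over one row of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence x (one row per item)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # Every item's query is compared with every item's key, however far apart they are.
    weights = [softmax([sum(qi * ki for qi, ki in zip(q_row, k_row)) / math.sqrt(d_k)
                        for k_row in k])
               for q_row in q]
    # Each output row is a weighted average of all the value vectors.
    return matmul(weights, v), weights

# Three toy "tokens" with 2-dimensional embeddings (hypothetical values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projection matrices
out, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1 and shows how strongly one item attends to every other item. Since these scores for all positions come from a few matrix products rather than a step-by-step loop through the sequence, the computation parallelizes well on modern hardware.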

What can engineers do with generative AI?

As noted above, generative AI, at least in its current state, is much less likely than generative design to be practically useful for engineering. However, there are already examples of generative AI being used to automate part of the 3D modeling process by converting 2D images into 3D models. One example, NeROIC (Neural Rendering of Objects from Online Image Collections), uses a neural network to generate 3D models of common objects from online images. Given the rate at which this technology seems to be advancing, it’s not hard to imagine a whole host of GenAI tools that could improve CAD workflows.

Another potential application for generative AI in engineering is producing synthetic data for simulation and validation. Synthetic data can stand in for data from real-world events that are rare, undesirable, or both, such as natural disasters or catastrophic system failures. Of course, users must be wary of implicit biases and ensure that any synthetic data produced with GenAI is representative of the real-world data distribution.
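One simple way to check representativeness, sketched here in Python for a single numeric variable, is the two-sample Kolmogorov-Smirnov statistic: the largest vertical gap between the empirical cumulative distribution functions of the real and synthetic samples. The data below are random stand-ins generated for illustration, not real measurements. In practice, SciPy’s `scipy.stats.ks_2samp` computes the same statistic along with a p-value.

```python
import bisect
import random

def ks_statistic(real, synthetic):
    """Two-sample Kolmogorov-Smirnov statistic: max |F_real(x) - F_synth(x)|."""
    a, b = sorted(real), sorted(synthetic)
    gap = 0.0
    # Both empirical CDFs only change at observed values, so checking those suffices.
    for x in a + b:
        f_a = bisect.bisect_right(a, x) / len(a)
        f_b = bisect.bisect_right(b, x) / len(b)
        gap = max(gap, abs(f_a - f_b))
    return gap

rng = random.Random(42)
# Stand-ins: "measured" data plus two synthetic generators, one faithful and one biased.
real = [rng.gauss(1.0, 0.2) for _ in range(500)]
faithful = [rng.gauss(1.0, 0.2) for _ in range(500)]
biased = [rng.gauss(1.3, 0.2) for _ in range(500)]

print(f"faithful: {ks_statistic(real, faithful):.3f}")
print(f"biased:   {ks_statistic(real, biased):.3f}")
```

A statistic close to 0 suggests the two distributions agree. For large samples, values above roughly 1.36·√((n + m)/(n·m)) (about 0.086 for n = m = 500) reject equality at the 5% level. Passing this check on one variable is necessary but not sufficient: synthetic data must also reproduce the correlations between variables and, for rare-event work, the tails of the distribution.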

Many other use cases for generative AI in engineering are likely to emerge as the technology continues to develop and scale. Automatically generating documentation or snippets of code and identifying anomalous patterns in production data for predictive maintenance and quality control are just a few of the potential engineering applications for generative AI.