The latest generation of engineering computers features new neural processing units (NPUs) to give AI a computational boost—but not all users will benefit equally.

Your computer may be personal, but that’s no longer enough. PCs are the latest convert in the spread of artificial intelligence (AI), and they’ve rebranded as AI PCs.

It’s not entirely a marketing buzzword. AI PCs, which are now being offered by major PC makers including Dell and HP, have at least one new part to justify the new name: the neural processing unit, or NPU. Sitting alongside the CPU (or integrated within it) and complementing the AI-friendly GPU, the NPU is meant to accelerate the machine learning calculations that are becoming increasingly important to modern software.

Engineering software is no exception. CAD and simulation providers have been testing the waters of AI, and it seems inevitable that AI will play a growing role for engineers, architects and other professional users.

So, should engineers rush out to buy a new, NPU-equipped AI PC, or wait for the NPU to mature? Here’s what you need to know about the emerging tech and where it can make an impact.

CPU, GPU and NPU: The evolution of computing processors

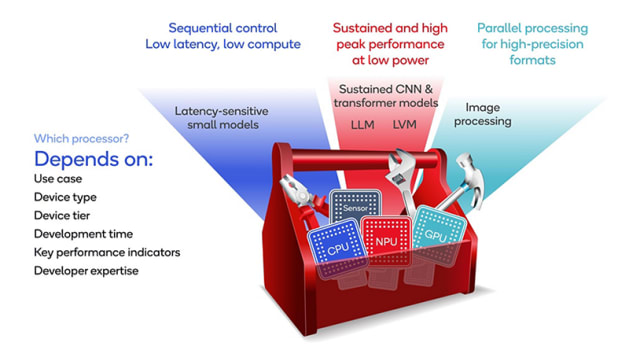

All computers are built around a central processing unit (CPU) that can handle a wide variety of instructions sequentially. Over time, CPU chips have become faster and more powerful. However, our appetite for crunching numbers has grown, and continues to grow, even faster.

Chip designers responded by offloading specific CPU-intensive instructions, such as graphics and video rendering, to a second processor called a graphics processing unit (GPU). Through parallel processing, GPUs significantly improved the performance of graphics and computational tasks in demanding applications such as simulation and 3D rendering.

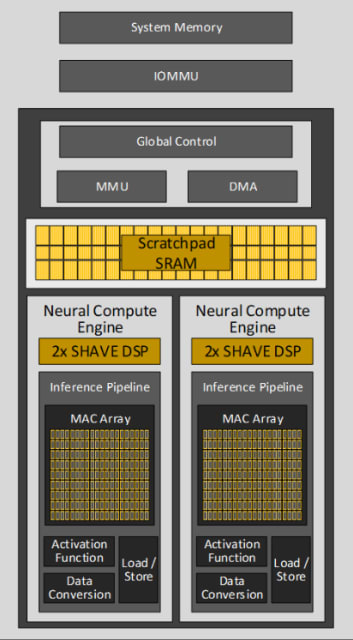

The recent advent of AI software dramatically increased the demand for processing data yet again. This time, chip designers have responded by offloading more CPU-intensive instructions, such as the mathematics for neural networks, to a third processor called a neural processing unit (NPU). The NPU specializes in AI computations such as matrix multiplication and convolution.
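To make those two operations concrete, here is a minimal sketch in plain Python. It is an illustration only, not how any NPU is actually programmed: the function names `matmul` and `conv1d` and the sample numbers are hypothetical. The point is that both operations reduce to long runs of multiply-and-add steps, and each output value can be computed independently of the others, which is the kind of work a parallel accelerator is built for.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    m = len(b[0])
    # Each output cell is an independent sum of k multiply-and-add steps.
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(m)]
            for row in a]


def conv1d(signal, kernel):
    """Slide the kernel along the signal without padding (the kernel is not
    flipped, which matches how neural network convolution layers are usually defined)."""
    width = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(width))
            for i in range(len(signal) - width + 1)]


# A tiny "layer": one input with three features, mapped to two outputs.
inputs = [[1.0, 2.0, 3.0]]
weights = [[0.5, -1.0],
           [0.25, 0.0],
           [1.0, 2.0]]
layer_output = matmul(inputs, weights)   # [[4.0, 5.0]]

# A two-tap averaging kernel applied to a short signal.
smoothed = conv1d([1.0, 2.0, 3.0, 4.0], [0.5, 0.5])   # [1.5, 2.5, 3.5]
```

A real neural network repeats these steps across matrices with thousands of rows and columns, which is why dedicated hardware for them pays off.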

How do NPUs accelerate AI applications?

Unlike CPUs, which process instructions sequentially, NPUs are optimized for parallel computing. That makes them highly efficient at running machine learning algorithms, transforming large volumes of multimedia data and executing neural network operations.

NPUs can achieve significant performance improvements over CPUs and GPUs for certain instructions. Intel, for instance, claims that its new NPU-equipped CPU delivers 1.7 times the generative AI performance of the previous-generation chip, which lacks an NPU. The extent of the improvement depends on many factors, including how well the application software is optimized to take advantage of the NPU. Speed isn’t the only potential benefit, either: reassigning instructions to the NPU can also reduce power consumption and improve battery life. According to Intel’s benchmarks, NPU offloading cut power consumption on Zoom calls by 38%.
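To put Intel’s two figures in everyday terms, the short calculation below converts them into time and battery estimates. It is a back-of-the-envelope sketch that rests on two assumptions not stated by Intel: that the 1.7 figure is a throughput ratio for a fixed task, and that the 38% reduction applies to the whole device’s power draw during a call (if it covers only the processor, the real battery gain would be smaller). The function names are hypothetical.

```python
def time_fraction(speedup):
    """Fraction of the original run time needed after a given speedup,
    assuming the speedup is a throughput ratio on a fixed task."""
    return 1.0 / speedup


def runtime_multiplier(power_reduction):
    """How much longer a fixed battery charge lasts if power draw falls by
    the given fraction (assumes the reduction applies to total device power)."""
    return 1.0 / (1.0 - power_reduction)


# Figures quoted from Intel in the article.
gen_ai_time = time_fraction(1.7)        # about 0.59 of the original time
zoom_runtime = runtime_multiplier(0.38)  # about 1.61 times the call time per charge

print(f"Generative AI task takes {gen_ai_time:.0%} of the previous time")
print(f"Zoom time per charge grows by a factor of {zoom_runtime:.2f}")
```

Under those assumptions, a generative AI task that took 10 minutes would finish in roughly 6, and a battery that lasted two hours on a video call would last a little over three.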

Major chipmakers have developed their own versions of NPUs meant to accelerate AI applications on a wide variety of devices. While the NPU architectures vary along with their speed, processing capacity, power consumption, thermal performance and other characteristics, these neural processors all share the goal of improving AI and machine learning performance. Apple’s custom A-series and M-series processors, which power its Mac computers as well as iPhones and iPads, include an NPU that Apple calls the Neural Engine. AMD’s Ryzen 7040 and 8040 series processors include NPUs based on the chipmaker’s XDNA architecture. Qualcomm’s Hexagon NPU brings AI acceleration to its mobile Snapdragon SoCs.

The list goes on, but for engineering users the most significant NPU may be Intel’s. The chipmaker has integrated an NPU into its latest-generation Intel Core Ultra processors, which power most of the new generation of engineering workstations. Intel says that it has partnered with more than 100 independent software vendors (ISVs) for AI PC optimization, with more than 300 AI-accelerated features to come throughout 2024. Unfortunately, no engineering ISVs are listed as partners on Intel’s website, at least not yet.

Should engineers upgrade to an AI PC?

Engineers who are already due for a workstation upgrade have no reason to avoid the latest generation of AI PCs, which come with all the standard generational improvements. However, at this point the NPU itself is not a reason for engineers to upgrade. There isn’t yet enough AI incorporated in popular engineering software to make an appreciable difference for those workloads. But that could change, and quickly.

As with CPUs and GPUs before them, it’s only natural to assume that NPUs will evolve in capability as their utility increases. Predicting the future is always tricky, but a few visible trends in AI-related software are likely to drive NPU hardware development.

CPU designs and architectures are approaching various limits. This suggests that GPUs and NPUs will experience most of the advances needed to keep pace with AI improvements. Advances in machine learning algorithms will likely reduce the demand for computing resources, but increases in model complexity may overshadow the efficiency gains. NPUs could serve a key role in making up the difference.

As AI features become increasingly popular in applications, NPUs may become essential to everyone’s PC, engineers’ included.