Here’s how AR is being used to build NASA’s Orion spacecraft and more.

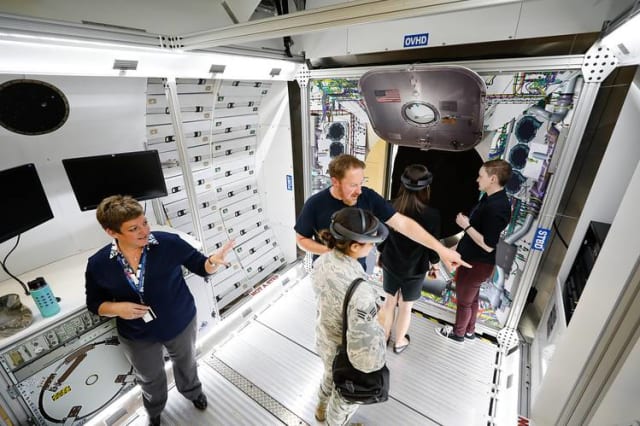

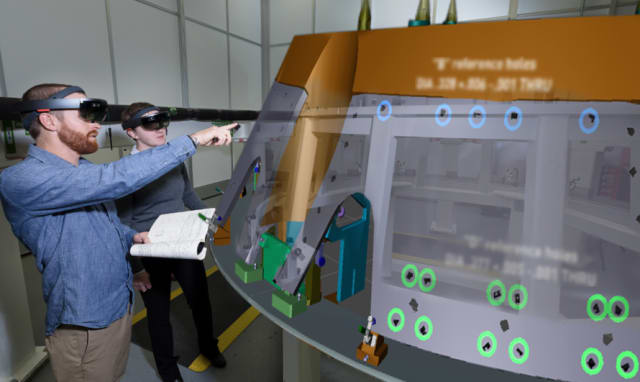

Lockheed Martin is famous for engineering innovation, dating back to the legendary Skunk Works. Today, the defense contractor is using augmented reality (AR) technology in its manufacturing processes and across entire product lifecycles. Lockheed Martin’s AR project began in the Space Systems division, for example in assembly and quality processes for NASA’s Orion spacecraft, but has been so successful that the company has deployed the Microsoft HoloLens hardware and Scope AR software in other divisions, namely Aeronautics, Missiles and Fire Control, and Rotary and Mission Systems. The company may even send HoloLens to space on crewed missions to support training and maintenance tasks.

To find out more about what Lockheed Martin is doing with AR, engineering.com spoke to Shelley Peterson, Principal Investigator for Augmented and Mixed Reality for Lockheed Martin Space Systems, and Scott Montgomerie, co-founder and CEO of Scope AR, the AR software Lockheed Martin is using. Check out our conversation below.

Why did Lockheed Martin start using augmented reality?

SP: The way that our spacecraft are built involves drawings, models, and lots of data that has to be interpreted. For space, we’re often building a small number of spacecraft. We have programs that have higher volumes, but in many cases, we’re interpreting data in almost every build. And that interpretation takes time. When you can place data spatially, there’s just a significant advantage. It removes so much of the interpretation. We’ve seen in the past that it takes about 50% of the time to go through all of that data and to work with peers to make sure the action that’s about to be taken is the correct one.

Of course, when building spacecraft, we need to be very cautious during the actions that we’re taking in the manufacturing process. With 50% of the time being spent just on interpreting the data, our leadership gave it a name—information overhead—and we set a goal to optimize it.

What sort of data are we talking about here? Assembly instructions?

SP: It spans assembly, the manufacturing process, and assembly, launch and test operations, as well as use cases in maintenance and sustainment. We felt that manufacturing was a great place to start because it’s an easy place to measure. We can calculate how much time is being saved, along with the other benefits of the technology.

What type of personnel wear and use AR in their jobs?

SP: It’s the technician on the shop floor along with the quality engineers who support them. And then these technicians and engineers work with us to build the AR content. They provide us information and feedback on what content needs to be built, and in some cases they also build the content.

One example is position alignment: any objects that have to be placed within a 0.5” tolerance, such as fasteners, strain gauges, transducers, heaters, thermistors, accelerometers, or hook-and-loop fasteners. (There’s a lot of Velcro and tape on spacecraft!) Just for fasteners, we’re seeing about $38 in touch-labor savings per fastener. To put that in context, Lockheed purchases over 2 million fasteners per year. I think that capability applies to all of those other components that I just mentioned.

We’ve also seen drilling and torque applications. We’re working on an activity right now, a start-to-finish work instruction for the Orion crew seat module, to build up the crew seat from start to finish just with AR. So, we started off with shop aids that supplement pre-existing resources, and we’re still doing that, but we’re also adding in a work instruction that is really a start-to-finish assembly process.
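To put the fastener figures quoted above in perspective, here is a rough back-of-the-envelope sketch. The $38 per-fastener saving and the 2 million fasteners per year come from the interview (the latter is stated as "over" 2 million, so it is a floor). The interview does not say what share of those fasteners is installed with AR guidance, so `ar_share` is a hypothetical parameter introduced purely for illustration, and the results are not figures reported by Lockheed Martin.

```python
# Figures quoted in the interview.
SAVINGS_PER_FASTENER_USD = 38           # touch-labor savings per fastener
FASTENERS_PURCHASED_PER_YEAR = 2_000_000  # "over 2 million", so a lower bound


def annual_savings_usd(ar_share: float) -> float:
    """Estimate yearly touch-labor savings.

    ar_share is a hypothetical fraction (0.0 to 1.0) of purchased
    fasteners that are placed with AR guidance. The interview does
    not give this number; it is an assumption for illustration only.
    """
    if not 0.0 <= ar_share <= 1.0:
        raise ValueError("ar_share must be between 0.0 and 1.0")
    return SAVINGS_PER_FASTENER_USD * FASTENERS_PURCHASED_PER_YEAR * ar_share


if __name__ == "__main__":
    # Print the estimate for a few assumed adoption levels.
    for share in (0.01, 0.10, 0.50, 1.00):
        print(f"{share:>4.0%} of fasteners: ${annual_savings_usd(share):,.0f} per year")
```

Even at a small assumed share, the per-fastener saving adds up quickly, which is consistent with the point that the same capability carries over to the other components listed.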

There’s an old saying, “There’s no repair shop in space”. Quality is obviously important in this industry. What can you tell me about how AR is used in quality and inspection?

SP: When the quality department is looking to verify component placement in assembly, AR is a very quick and easy way to do that. Also, when they verify the work instruction in advance, it’s much quicker to do that through AR than through traditional methods. Many of the support organizations for the manufacturing process are benefiting as well.

I imagine that it doesn’t require much, if any, training for the operators to interpret the content. Is that right?

SP: That’s correct, yes. Because it’s spatially referenced in the environment, they’re often seeing exactly what they expect. It’s just in a much more easily accessible format.

Who creates the AR content? And do they require special training to be able to generate AR content?

SP: It’s normally within the engineering and technology organization, and we have manufacturing engineers within that organization who build the content. Scope AR has a training package online, and as soon as the engineer has their computer configured, we point them to the online training. It takes about a day to go through that training and then they’re set. The content actually uses the same models as the original CAD for the assembly.

How does the time to create AR content compare to the time it would take to create more traditional training materials or instructions?

SP: We can create content much more rapidly in AR, and we’re seeing that in many activities. For example, in some cases we’re placing objects such as standoffs, strain gauges and transducers across curved surfaces, and we have typically used Mylar templates. With AR, we’ve had scenarios where we can build the AR content before the Mylar could be ready. So, there’s the process to create the work instruction in the traditional method, but then there’s also the process to create additional resources like the Mylar for that traditional process, or to align a laser projector, which can take weeks. We typically build these position alignment capabilities in less than a day, sometimes in a couple of hours.

SM: If you think about creating traditional work instructions, essentially you’re taking an idea, or a set of process steps, that has been invented in the brain of a mechanical engineer, and then you’re going through a translation process to put it into words and images, and in Lockheed’s case special Mylar templates, just to perform any instruction. And so that takes up a lot of time and energy.

SM: When those traditional instructions get to the technician, they’re doing a mental mapping, reading those texts, deconstructing what to do with those images, and then using a pile of calculations to understand what they need to do, and where. This type of instruction introduces a lot of inefficiency, from translating the conceptual idea into words or images, to translating it back from words and images, and on to the work. So, augmented reality is just a much more intuitive way of translating what is conceptually in the mechanical engineer’s head and delivering that knowledge to a frontline technician to carry out the instruction.

That’s interesting, because the instinct might be that AR would be useful for high volume production, where you make one up-front time investment to create content for the AR system, and then you use it multiple times. But for such low production volume as the space industry, it’s essential that it’s a quick, easy process to create the content.

SP: Yes. We have to have that in order to have a sufficient return on investment, for it to make sense. And what we’ve seen so far is positive ROI in the first application. There are definitely additional advantages when we’re using it across high volumes, but we’re seeing value in the initial build of a spacecraft.

Shelley, you mentioned the laser projection technique as an existing alternative. That makes me wonder, with what kind of precision can you have an AR instruction align on a physical surface?

SP: We’re seeing 0.1” accuracy. We have many components on the aircraft that are at a 0.5” tolerance requirement, so 0.1” accuracy is well within range for those half-inch tolerance requirements.

So, this isn’t just one lab trying out AR. It’s really a full deployment of the technology.

SP: Depending on how we phrase full deployment, yes. Full deployment is definitely within the roadmap.

Very exciting. So, what are the next steps? Where do you think Lockheed Martin could improve upon your use of AR technology?

SP: We’re looking at how the technology enables full digital threads. That covers everything from early-stage concept design, and design through to manufacturing, assembly, launch and test operations, launching host operations, maintenance and sustainment, even astronauts in space. We see benefits in having the data available across an entire life cycle, and not only for the flight data, but also for the test equipment and other aspects of our environments that support the manufacturing and operations of that resource.

We started with manufacturing because it’s the easiest to quantify and gives us a really good look at ROI, but it spans easily into those other areas: into design, into operations, and other elements. Anytime people take paper or computer resources to transfer ideas to something in the real world, there’s opportunity for AR.

Scott, we understand how Lockheed Martin uses Scope AR. But what other manufacturing applications or industries can benefit from AR?

Scott Montgomerie, Scope AR Cofounder and CEO

SM: Yeah, I think Shelley provided a really great overview of aerospace. We’re really excited about our applications in aerospace. To go to the complete other extreme, really high volume, we’re actually pretty strong in consumer packaged goods (CPG) manufacturing. Scope AR has a number of different use cases on assembly lines. Where we’re seeing a lot of benefit there is in reducing downtime on the assembly line. Reducing errors in simple procedures can have a major impact on high-volume manufacturing lines.

SM: For example, take clean facilities, where they make food products. With the high turnover of operator personnel, they’re fine doing their jobs day to day, but when something goes wrong and the line breaks down, there’s not really somebody on the line who knows how to fix it. In the old world somebody would be able to fix it, but they’re not there in the clean room the entire time. They’re somewhere else, and the time from things going wrong to the time that person can actually get on site and start diagnosing and fixing it can lead to an extended period of downtime. Using our remote assistance application, the frontline technician simply calls up the expert, who is somewhere else and can begin diagnosing the problem immediately after an error, and quite often the expert doesn’t even need to come on site. Just a simple procedure can guide that frontline technician through how to do the repair, and get the line back up and running much, much quicker. I can tell you that in some cases we’re seeing about a 50% reduction in downtime with those types of use cases.

SM: And then secondarily, AR work instructions can prevent errors as well. So, again, frontline personnel often see high turnover and training is an issue. Simple mistakes such as installing something improperly can cause significant downtime. So, by using AR work instructions to show these frontline workers how to perform these tasks, they’re able to reduce error rates and improve the outcomes that way, as well.

SP: And we see error prevention, too. Often you don’t know if an error was prevented, but on a number of activities we’ve seen proof that AR has prevented an error from occurring. In the space industry, on some programs an error can lead to a day of delay, and a day of delay can cost over $1 million.

To learn more about how AR is being used in engineering, check out Microsoft Unveils Its AR/VR Vision for the Future of Work.