Elon Musk says Yes. AI watchdog says No.

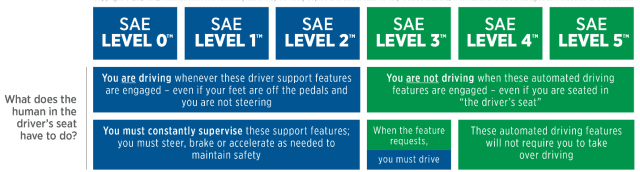

In Walter Isaacson’s biography Elon Musk, we learn how AI was to be used in Tesla vehicles to deliver the long-awaited Full Self Driving mode. Year after year, Tesla promises its owners Full Self Driving (FSD): hands-off-the-wheel, point-to-point driving, aka Level 4 autonomy. Yet FSD remains in beta. That doesn’t stop Tesla from charging $199/month for it, though.

Level 4 autonomy, or L4, as defined by the Society of Automotive Engineers (SAE), is a mode in which the vehicle drives itself from point A to point B, hands off the steering wheel, with no human needed as a backup, though only within defined conditions such as a mapped area. The only thing more magical is L5: a car that drives itself anywhere, in any conditions, and so needs no steering wheel. No gas or brake pedal, either. Vehicles without steering wheels or pedals have been put on the road by several companies, but only as low-speed shuttles, such as Olli, a 3D-printed electric vehicle (EV) and a highlight of IMTS 2016, the biggest manufacturing show in the U.S. However, Olli’s manufacturer, Local Motors, ran out of money and closed its doors in January 2022, a month after one of its vehicles being tested in Toronto ran into a tree.

The traditional approach to L4 has been to program a response for every imaginable traffic situation with nested if-this-then-that logic. For example, if a car turns in front of the vehicle, drive around it if the speeds allow; if not, stop. Programmers have built libraries of thousands upon thousands of situation/response pairs, only to have “edge cases,” unanticipated and sometimes disastrous events, keep maddeningly coming up.
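To make the situation/response approach concrete, here is a minimal Python sketch, assuming a hypothetical perception layer that reduces the scene to simple key/value facts. The rule names, scene keys, and speed threshold are illustrative inventions, not any carmaker’s code. It encodes the car-turning example above as one rule in a prioritized list.

```python
from typing import Callable, Optional

# Hypothetical perception output: simple facts about the scene as key/value pairs.
Situation = dict

def pedestrian_in_path(s: Situation) -> Optional[str]:
    """Always stop for a pedestrian in the vehicle's path."""
    return "stop" if s.get("pedestrian_in_path") else None

def car_turning_in_front(s: Situation) -> Optional[str]:
    """A car turns in front: drive around if the speeds allow it; if not, stop."""
    if not s.get("car_turning_in_front"):
        return None  # this rule does not apply
    if s.get("adjacent_lane_clear") and s.get("speed_mps", 0.0) <= s.get("max_swerve_speed_mps", 0.0):
        return "steer around"
    return "stop"

def red_light(s: Situation) -> Optional[str]:
    """Stop at a red light."""
    return "stop" if s.get("light") == "red" else None

# The "library": rules checked in priority order. Real libraries hold thousands of these.
RULES: list[Callable[[Situation], Optional[str]]] = [
    pedestrian_in_path,
    car_turning_in_front,
    red_light,
]

def respond(s: Situation) -> str:
    """Return the first matching rule's response."""
    for rule in RULES:
        action = rule(s)
        if action is not None:
            return action
    # Default when no rule fires. An unanticipated hazard also lands here.
    return "continue"

print(respond({"car_turning_in_front": True, "adjacent_lane_clear": True,
               "speed_mps": 8.0, "max_swerve_speed_mps": 10.0}))  # steer around
print(respond({"mattress_on_road": True}))                         # continue
```

Any scene no rule anticipates, such as a mattress on the road, silently falls through to the default `"continue"`. That fall-through is what an edge case looks like in this style of program, and adding one more rule only moves the boundary.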

Teslas and other self-driving vehicles, notably robotaxis, have come under increasing scrutiny. The National Highway Traffic Safety Administration (NHTSA) investigated Tesla for its role in 16 crashes into emergency vehicles that occurred while the Teslas were in Autopilot or Full Self Driving mode. In March 2018, an Uber self-driving test vehicle with an inattentive safety driver behind the wheel struck and killed a woman walking her bicycle across a street in Tempe, Ariz. Recently, a Cruise robotaxi ran into a pedestrian and dragged her 20 feet. Self-driving vehicle companies have tried to downplay such incidents, arguing that they are few and far between and that autonomous vehicles are far safer than human drivers, who kill some 40,000 people every year in the U.S. alone. The argument has not worked. It’s not fair, say the technologists. It’s zero tolerance, says the public.

Musk: I Have a Better Way

Elon Musk is hardly one to accept a conventional approach such as the situation/response library. A champion of the move-fast-and-break-things ethos, now the motto of every would-be disruptor startup, he knew he had a better way.

The better way was to learn how the best drivers drove and then use AI to apply their behavior in Tesla’s Full Self Driving mode. Here Tesla had a clear advantage over its competitors: since the first Teslas hit the road, the vehicles have been sending videos back to the company. In “The Radical Scope of Tesla’s Data Hoard,” IEEE Spectrum reports on the data Tesla vehicles collect. While many modern vehicles are sold with black boxes that record pre-crash data, Tesla vehicles go the extra mile, collecting and keeping extended route data. This came to light when Tesla used that data to exonerate itself in a civil lawsuit. Tesla was also suspected of storing millions of hours of video, petabytes of data. Musk’s biography confirms it, describing how Musk realized that the video could serve as a learning library for Tesla’s AI, specifically its neural networks.

From this massive data lake, Tesla employees identified the best drivers. After that, it was simple: train the AI to drive the way the good drivers drive. Like a good human driver, a Tesla would then be able to handle any situation, not just the ones in the situation/response libraries.
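Training an AI to drive like the good drivers is generally known as imitation learning, or behavior cloning. The sketch below is a minimal stand-in under loud assumptions: the `Frame` fields, the driver safety scores, and the use of a k-nearest-neighbor lookup in place of the neural networks the biography describes are all hypothetical, chosen so the example runs with only the Python standard library.

```python
from dataclasses import dataclass
from math import dist

@dataclass
class Frame:
    """One logged moment of human driving (hypothetical fields)."""
    driver_id: str
    speed_mps: float   # vehicle speed
    gap_m: float       # distance to the car ahead
    accel_mps2: float  # what the human did next: the action to imitate

def pick_best_drivers(frames: list[Frame], scores: dict[str, float], top_n: int) -> list[Frame]:
    """Keep only frames from the top_n drivers by a (hypothetical) safety score."""
    best = set(sorted(scores, key=scores.get, reverse=True)[:top_n])
    return [f for f in frames if f.driver_id in best]

def imitate(training: list[Frame], speed_mps: float, gap_m: float, k: int = 2) -> float:
    """Nearest-neighbor behavior cloning: average the actions good drivers took
    in the k most similar logged situations. Features are left unscaled for
    brevity; a real system would normalize them."""
    nearest = sorted(training, key=lambda f: dist((f.speed_mps, f.gap_m), (speed_mps, gap_m)))[:k]
    return sum(f.accel_mps2 for f in nearest) / len(nearest)

frames = [
    Frame("A", 20.0, 10.0, -3.0),  # driver A backs off when the gap is short
    Frame("A", 20.0, 15.0, -2.0),
    Frame("A", 20.0, 60.0, 0.5),
    Frame("A", 25.0, 13.0, -3.5),
    Frame("B", 20.0, 10.0, 1.5),   # driver B speeds up while tailgating
    Frame("B", 25.0, 12.0, 2.0),
]
scores = {"A": 0.95, "B": 0.40}
training = pick_best_drivers(frames, scores, top_n=1)
print(imitate(training, speed_mps=21.0, gap_m=11.0))  # -2.5 (brake, like driver A)
```

Unlike the rule library, `imitate` returns an action for any input, which is the appeal of the approach. Whether that action is right in a situation none of the good drivers ever faced is exactly the open question.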

Mission Possible?

Whether AI can replace a human behind the wheel remains to be seen. Tesla still charges thousands of dollars a year for Full Self Driving but has yet to deliver the technology. Mercedes has already passed Tesla, attaining Level 3 autonomy with its fully electric EQS vehicles this year, a step beyond the supervised driver assistance that FSD actually provides.

Meanwhile, opponents of autonomous vehicles (AVs) and AI grow stronger and louder. Here in San Francisco, Cruise was practically drummed out of town after the October 2nd incident in which one of its vehicles dragged a pedestrian, pinned underneath the car, for 20 feet, and the company (allegedly) failed to show regulators the full video.

Even some stalwart technologists have crossed to the side of safety. Musk himself, despite his use of AI at Tesla, has condemned AI publicly and forcefully, warning that it could lead to the destruction of civilization. What else would you expect from a sci-fi fan, as Musk admits to being? But from a respected engineering publication? In IEEE Spectrum, a former fighter pilot turned AI watchdog, Mary L. “Missy” Cummings, warns us of the dangers of using AI in self-driving vehicles.

In “What Self-Driving Cars Tell Us About AI Risks,” Cummings issues guidelines for AI development, drawing on examples from autonomous vehicles. Whether situation/response programming counts as AI, in the way the term is commonly used, is debatable, so let us give Cummings a little room.

We Have a Hard Stop

Whatever your interpretation of AI, autonomous vehicles serve as examples of machines that, under the influence of software, can behave badly, badly enough to cause damage or hurt people. The examples range from inexcusable to understandable. Inexcusable is an autonomous vehicle running into anything ahead of it. That should never happen. Whether the system misidentifies an obstacle, or fails to identify it altogether and therefore cannot predict its behavior, if a mass is detected ahead and the vehicle’s present speed would cause a collision, it must slam on the brakes. No brakes were slammed on when one AV ran into the back of an articulated bus because the system had identified it as a regular, that is, shorter, bus.
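The hard-stop rule is a physics check that need not depend on what the classifier thinks the object is. The sketch below assumes constant braking capability and an obstacle that holds its speed; `MAX_DECEL_MPS2`, `LATENCY_S`, `MARGIN_M`, and the `Detection` type are illustrative assumptions, not values from Cummings or any manufacturer. The gap needed to stop is v·t + v²/(2a), where v is the closing speed, t the system latency, and a the maximum deceleration.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed parameters for illustration only; real values depend on vehicle, tires, and road.
MAX_DECEL_MPS2 = 8.0   # roughly 0.8 g of braking on dry pavement
LATENCY_S = 0.2        # time between detection and full braking
MARGIN_M = 2.0         # buffer left between the vehicle and the obstacle

@dataclass
class Detection:
    distance_m: float             # gap to the detected mass ahead
    closing_speed_mps: float      # rate the gap shrinks (own speed minus obstacle speed)
    label: Optional[str] = None   # classifier's guess; deliberately ignored by the rule

def stopping_distance(closing_speed_mps: float) -> float:
    """Gap consumed while cancelling the closing speed, assuming the obstacle
    holds its speed: latency travel plus v^2 / (2a)."""
    v = max(closing_speed_mps, 0.0)
    return v * LATENCY_S + v * v / (2 * MAX_DECEL_MPS2)

def must_hard_brake(d: Detection) -> bool:
    """Brake if present speeds would close the gap, whatever the object was classified as."""
    if d.closing_speed_mps <= 0:
        return False  # the gap is steady or growing
    return d.distance_m <= stopping_distance(d.closing_speed_mps) + MARGIN_M

# A bus labeled as a shorter bus, 10 m ahead, gap closing at 10 m/s:
print(must_hard_brake(Detection(10.0, 10.0, label="bus")))  # True: needs 8.25 m plus 2 m margin
print(must_hard_brake(Detection(50.0, 10.0)))               # False: plenty of room
```

Because `must_hard_brake` never reads `label`, misclassifying an articulated bus as a shorter one cannot suppress braking. The trade-off is that, in this sketch, a spurious detection triggers the same hard stop.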

Phantom braking, by contrast, is understandable, yet Cummings argues it is a perfect example of how AI not only fails to protect us but actually puts the occupants of AVs in harm’s way.

“One failure mode not previously anticipated is phantom braking,” writes Cummings. “For no obvious reason, a self-driving car will suddenly brake hard, perhaps causing a rear-end collision with the vehicle just behind it and other vehicles further back. Phantom braking has been seen in the self-driving cars of many different manufacturers and in ADAS [Advanced Driver Assistance Systems]-equipped cars as well.”

Backing up her claim, Cummings cites an NHTSA report showing that rear-end collisions account for 56% of crashes involving autonomous vehicles, exactly twice their 28% share of crashes among all vehicles.

“The cause of such events is still a mystery. Experts initially attributed it to human drivers following the self-driving car too closely (often accompanying their assessments by citing the misleading 94 percent statistic about driver error). However, an increasing number of these crashes have been reported to NHTSA. In May 2022, for instance, the NHTSA sent a letter to Tesla noting that the agency had received 758 complaints about phantom braking in Model 3 and Y cars. This past May, the German publication Handelsblatt reported on 1,500 complaints of braking issues with Tesla vehicles, as well as 2,400 complaints of sudden acceleration.”